One Simple Product Position Change Drove 27.6% Revenue Growth for Cybersecurity Leader

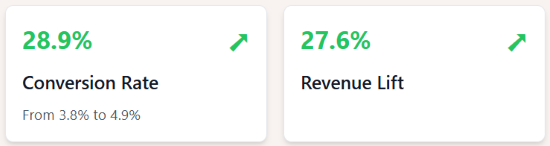

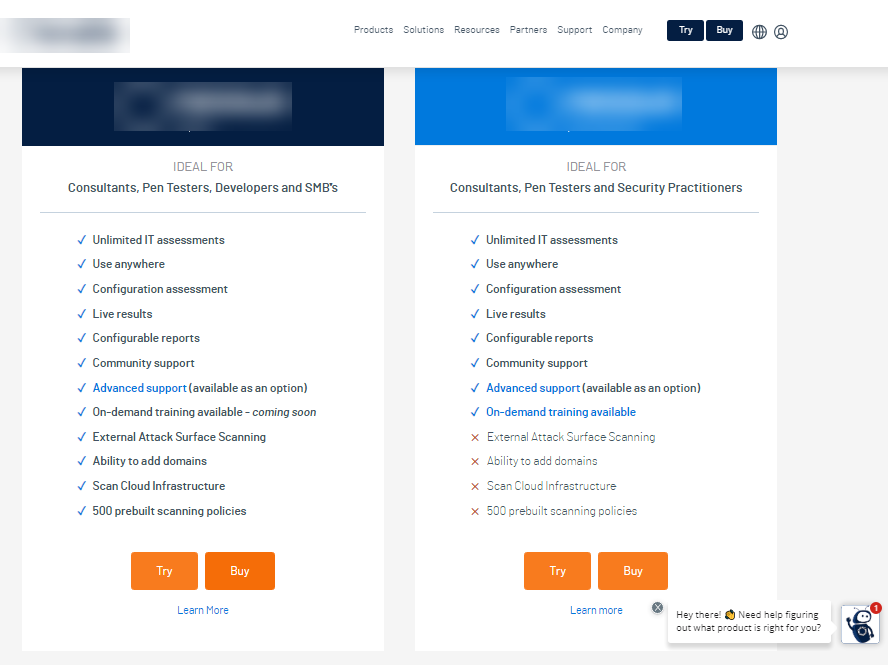

A leading enterprise cybersecurity company was seeing low selection rates for the premium "Expert" version of its vulnerability scanner software. Many customers defaulted to the less expensive "Pro" version simply because it appeared first on the page.

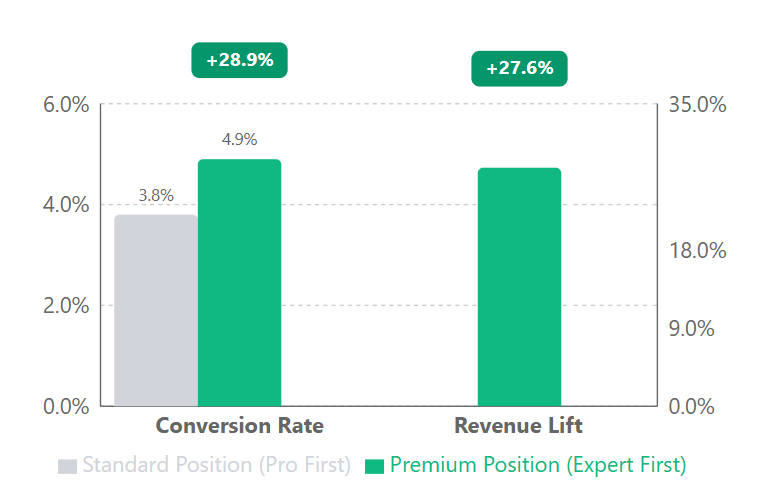

We ran a test that swapped the positions of the two product offerings, displaying the premium "Expert" option before the standard "Pro" option. The change measurably shifted purchasing behavior: conversion rate rose from 3.8% in the control to 4.9% in the variation.

Our hypothesis was simple: By repositioning the premium product (Expert) before the standard product (Pro), we could increase visibility of the higher-priced option and drive more customers to select it.

The implementation was straightforward:

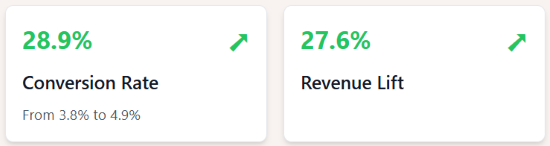

| Metric | Control | Variation | Lift |
|---|---|---|---|
| Users | 11,166 | 10,940 | - |
| Transactions | 428 | 535 | - |
| Conversion Rate | 3.8% | 4.9% | 28.9% |
| Revenue Lift | - | - | 27.6% |
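For readers who want to check the arithmetic, here is a minimal sketch that recomputes the conversion rates and the relative lift from the user and transaction counts in the table. The function names are illustrative and not part of any tooling used in the test.

```python
def conversion_rate(transactions: int, users: int) -> float:
    """Share of users who completed a transaction."""
    return transactions / users


def relative_lift(control_rate: float, variation_rate: float) -> float:
    """Relative improvement of the variation over the control."""
    return (variation_rate - control_rate) / control_rate


# Counts taken from the results table above.
control = conversion_rate(428, 11_166)
variation = conversion_rate(535, 10_940)
lift = relative_lift(control, variation)

print(f"Control conversion rate:   {control:.2%}")
print(f"Variation conversion rate: {variation:.2%}")
print(f"Relative lift:             {lift:.1%}")
```

Run on the unrounded counts, this gives rates of roughly 3.83% and 4.89% and a relative lift of about 27.6%. The 28.9% figure in the table is what you get when the lift is computed from the rounded rates (3.8% and 4.9%), so the two numbers differ only by rounding.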

While these results are impressive, we made sure to advise the client that they shouldn't count on a 28.9% conversion rate lift with a 27.6% revenue increase. Test period results can sometimes show exaggerated outcomes due to sample variability and the shorter measurement window.

What's most important here is that the test version outperformed the control, and the difference was statistically significant at the 99% confidence level. If you wanted a more precise estimate of long-term revenue lift, you'd need to run the test longer, but this comes with trade-offs. Longer tests delay rolling out the winning version, and in this case, the opportunity cost of waiting wasn't worth it given the clear statistical significance.

By positioning the premium option first, we created an "anchoring effect" where users calibrated their expectations based on the higher price point, making the standard option appear as a compromise rather than a default.

Want to see how our analytical approach can boost your conversion rates and revenue? Get in touch for a free strategy session where we'll break down your current funnel and identify quick wins for immediate growth.

Appendix: Detailed test parameters and statistical analysis

Test Parameters

| Parameter | Value |
|---|---|
| Devices | Desktop, tablet, and mobile |
| User Segments | All visitors to product page |
| Duration Criteria | Statistical significance or minimum of two weeks |
| Tracking | Unique promo codes for each product's CTA buttons |
| Variation Elements | Product display order only |

Statistical Analysis

| Statistical Summary Metrics | Value |
|---|---|
| Confidence level | 99% |
| Z-score | 3.85 |
| P-value | 0.0000597 |
| Conservative lift estimate | 22% |
| Sample size | 22,106 users combined |
| Test duration | 25 days |
| Participation rate | Not provided |
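The z-score above can be reproduced with a standard pooled two-proportion z-test on the reported counts. The sketch below is one common way to run that calculation, not necessarily the exact procedure used for this test. The one-sided p-value is an assumption: the source does not say whether the test was one- or two-sided, though the reported p-value is of the magnitude a one-sided test would give.

```python
import math


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> float:
    """Pooled two-proportion z-statistic for variation (x2/n2) vs control (x1/n1)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p2 - p1) / standard_error


def one_sided_p_value(z: float) -> float:
    """Upper-tail probability of a standard normal (assumed one-sided test)."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# Control: 428 transactions from 11,166 users.
# Variation: 535 transactions from 10,940 users.
z = two_proportion_z(428, 11_166, 535, 10_940)
p = one_sided_p_value(z)

print(f"z-score: {z:.2f}")
print(f"one-sided p-value: {p:.7f}")
```

With these counts the z-score comes out at about 3.85, matching the table, and the one-sided p-value lands close to 0.00006, well below the 0.01 threshold implied by a 99% confidence level.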

Field Notes